Load Distribution Design for Applications on AWS

Effective load distribution is a cornerstone of designing robust, scalable applications in the cloud. Amazon Web Services (AWS) provides several strategies and tools to ensure your application can handle varying loads efficiently. This guide explores key load distribution models and best practices for implementing them in AWS.

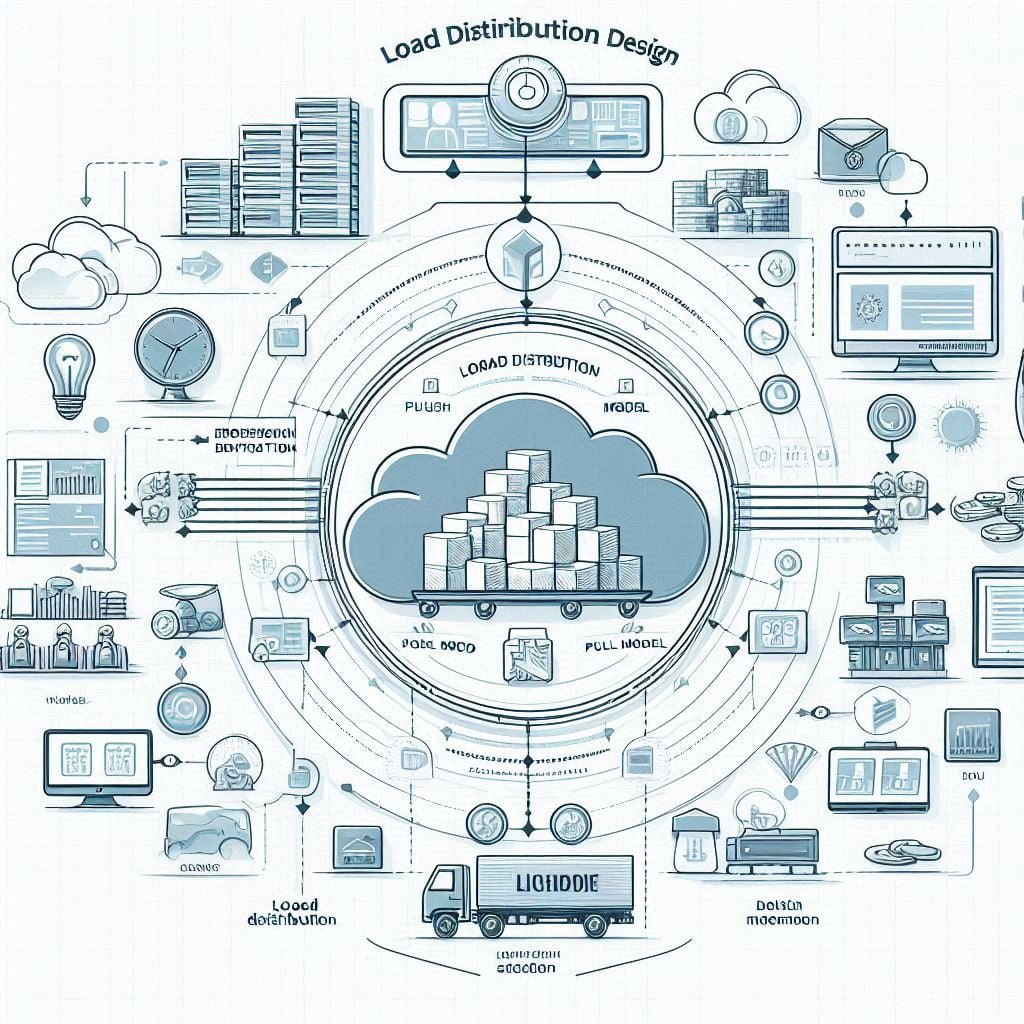

Understanding Load Distribution

Load distribution involves spreading incoming traffic across multiple resources to prevent any single resource from becoming overwhelmed. This not only enhances application performance but also ensures high availability and reliability.

Load Distribution Models

In AWS, load distribution can be achieved using two primary models: the Push Model and the Pull Model.

Push Model

In the Push Model, a central dispatcher or load balancer distributes incoming requests to multiple backend servers. AWS provides several services to implement this model:

- Elastic Load Balancing (ELB): Automatically distributes incoming application traffic across multiple targets, such as EC2 instances, containers, and IP addresses. ELB load balancer types include:

  - Application Load Balancer (ALB): Ideal for HTTP and HTTPS traffic, ALB operates at the application layer (OSI model layer 7) and routes requests based on attributes such as host name, URL path, and headers.

  - Network Load Balancer (NLB): Designed for high throughput and low latency, NLB operates at the transport layer (OSI model layer 4) and handles TCP, UDP, and TLS traffic.

  - Classic Load Balancer (CLB): The previous-generation load balancer, operating at both the transport layer (layer 4) and the application layer (layer 7). It provides basic load balancing of HTTP/HTTPS and TCP traffic; ALB or NLB is generally preferred for new workloads.

The Push Model is suitable for web applications, API endpoints, and services where a central point of control for traffic distribution is beneficial.
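To make the push idea concrete, here is a minimal sketch of a dispatcher that assigns each request to the next healthy backend in round-robin order. It is a toy simulation in plain Python, not the ELB API: the class `RoundRobinDispatcher`, its methods, and the backend names `app-1` to `app-3` are all hypothetical and exist only for illustration.

```python
from itertools import cycle


class RoundRobinDispatcher:
    """Toy push-model dispatcher (illustrative only, not an AWS API)."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._ring = cycle(self._backends)  # endless round-robin iterator

    def mark_unhealthy(self, backend):
        # Stand-in for a failed health check.
        self._healthy.discard(backend)

    def mark_healthy(self, backend):
        if backend in self._backends:
            self._healthy.add(backend)

    def route(self, request):
        # Look at each backend at most once per request, skipping unhealthy ones.
        for _ in range(len(self._backends)):
            backend = next(self._ring)
            if backend in self._healthy:
                return backend, request
        raise RuntimeError("no healthy backends available")


dispatcher = RoundRobinDispatcher(["app-1", "app-2", "app-3"])
dispatcher.mark_unhealthy("app-2")  # simulate one backend failing its health check
assignments = [dispatcher.route(f"GET /item/{i}")[0] for i in range(4)]
print(assignments)  # ['app-1', 'app-3', 'app-1', 'app-3']
```

The backends never ask for work; the dispatcher decides where each request goes. That is the defining trait of the Push Model, and it is why health checks matter: the dispatcher must know which targets can safely receive traffic.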

Pull Model

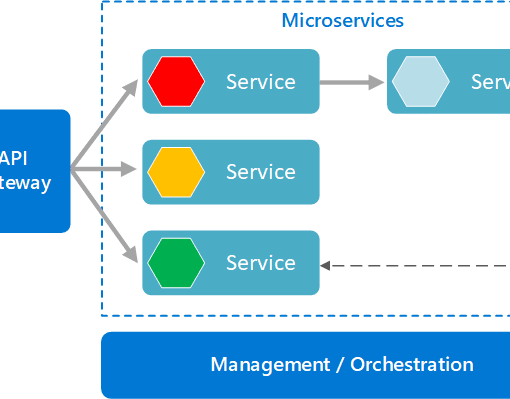

In the Pull Model, worker nodes pull tasks from a shared queue as they become available. This model is particularly effective for distributed processing and batch jobs. AWS services that support this model include:

- Amazon Simple Queue Service (SQS): A fully managed message queuing service that enables decoupling and scaling of microservices, distributed systems, and serverless applications. Worker nodes poll SQS for messages (tasks) and process them independently.

- Amazon Kinesis: A platform for real-time data processing. Kinesis streams allow multiple consumers to process data in parallel, pulling data as it becomes available.

- AWS Lambda: Lambda is often invoked in a push style (for example, as a target behind an ALB), but it also fits the Pull Model when integrated with SQS or Kinesis. In that case an event source mapping polls the queue or stream on your behalf and invokes your function with batches of messages or data records.

The Pull Model is ideal for scenarios requiring distributed processing, such as data analytics, order processing systems, and any application where tasks can be processed asynchronously.
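The pull pattern described above can be sketched with Python's standard library alone. This is a local simulation, not SQS code: `queue.Queue` stands in for the managed queue, threads stand in for worker nodes, and the `worker` function and the squaring "work" are hypothetical placeholders. A real SQS consumer would also need to handle at-least-once delivery and visibility timeouts, which this sketch omits.

```python
import queue
import threading


def worker(name, tasks, results, lock):
    """Pull tasks until the queue is drained, recording who processed what."""
    while True:
        try:
            task = tasks.get(timeout=0.1)  # analogous to polling the queue for a message
        except queue.Empty:
            return  # no work left, so this worker stops
        processed = task * task  # placeholder for real processing
        with lock:
            results[task] = (name, processed)
        tasks.task_done()  # analogous to deleting a message after successful processing


tasks = queue.Queue()
for n in range(10):
    tasks.put(n)  # enqueue all work before the workers start

results = {}
lock = threading.Lock()
workers = [
    threading.Thread(target=worker, args=(f"worker-{i}", tasks, results, lock))
    for i in range(3)
]
for t in workers:
    t.start()
tasks.join()  # block until every task has been marked done
for t in workers:
    t.join()

print(sorted((task, out) for task, (_, out) in results.items()))
```

No component assigns tasks to workers here. Each worker takes the next task when it is free, so faster workers naturally process more, and adding workers increases throughput without changing the producer.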

Best Practices for Load Distribution

To implement effective load distribution in AWS, consider the following best practices:

- Design for Statelessness:

- Whenever possible, design your applications to be stateless, meaning they do not store session-specific data on individual servers. This simplifies scaling and load balancing, as any server can handle any request.

- Use Auto Scaling:

- Configure Auto Scaling groups to automatically adjust the number of instances in response to traffic patterns. This ensures your application can handle varying loads without manual intervention.

- Monitor and Optimize:

- Use AWS CloudWatch to monitor the performance of your load balancers and backend instances. Set up alarms and automated responses to address potential issues before they impact users.

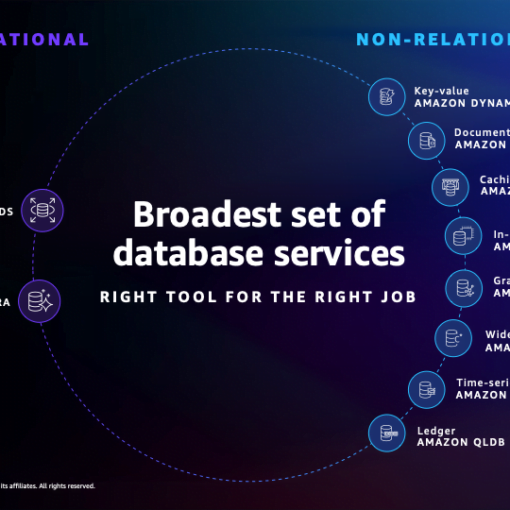

- Leverage Caching:

- Implement caching mechanisms using services like Amazon CloudFront (content delivery network) and Amazon ElastiCache (in-memory data store) to reduce load on backend servers and improve response times.

- Ensure High Availability:

- Distribute your resources across multiple Availability Zones (AZs) to ensure high availability and fault tolerance. ELB and other AWS services support multi-AZ deployments to enhance resilience.

- Security Considerations:

- Secure your load balancers and backend resources by implementing AWS Identity and Access Management (IAM) policies, encrypting data in transit and at rest, and using security groups and network ACLs to control traffic flow.
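As an illustration of the Auto Scaling practice above, the following sketch shows a simplified proportional scaling rule: resize the fleet so that the average per-instance metric moves toward a target value, clamped to a minimum and maximum size. This is an assumption-laden approximation in the spirit of target tracking, not the actual AWS algorithm, which also applies cooldowns and other safeguards. The function `desired_capacity` and all numbers are hypothetical.

```python
import math


def desired_capacity(current, metric_value, target_value, min_size, max_size):
    """Simplified proportional rule (illustrative, not the AWS implementation)."""
    if current < 1 or target_value <= 0:
        raise ValueError("current must be >= 1 and target_value must be > 0")
    # If 4 instances average 80% CPU and the target is 50%, we need 4 * 80 / 50 = 6.4,
    # rounded up so the fleet is never undersized.
    raw = math.ceil(current * metric_value / target_value)
    # Never go below the group's minimum or above its maximum.
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, 80.0, 50.0, 2, 10))   # 7  (scale out)
print(desired_capacity(4, 20.0, 50.0, 2, 10))   # 2  (scale in)
print(desired_capacity(4, 200.0, 50.0, 2, 10))  # 10 (capped at max_size)
```

Rounding up and clamping to a range reflect a common design choice: it is usually cheaper to run one instance too many than to drop requests, and the bounds protect against both runaway cost and scaling to zero.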

Effective load distribution is essential for building scalable, high-performance applications in AWS. By leveraging AWS’s robust load balancing and distributed processing services, and following best practices, you can ensure your application remains responsive, reliable, and capable of handling fluctuating traffic demands.