Designing Scalable Applications on AWS

Building applications that grow seamlessly with increasing users, data, and traffic is crucial for success in cloud environments. Amazon Web Services (AWS) provides the services and design patterns needed to build highly scalable systems that handle workloads that vary over time.

Importance of Scalability

Scalability is a critical factor in cloud-based application design. A scalable system can expand its infrastructure to accommodate growth and absorb additional load without degrading performance.

There are two primary approaches to scaling:

Vertical Scaling (Scale Up)

Vertical scaling increases the capacity of a single server or resource by adding more CPU, RAM, storage, or network capacity, for example by moving an Amazon EC2 instance to a larger instance type. While effective for certain applications, it has limitations: a single instance can only grow so large, and that one server remains a single point of failure.

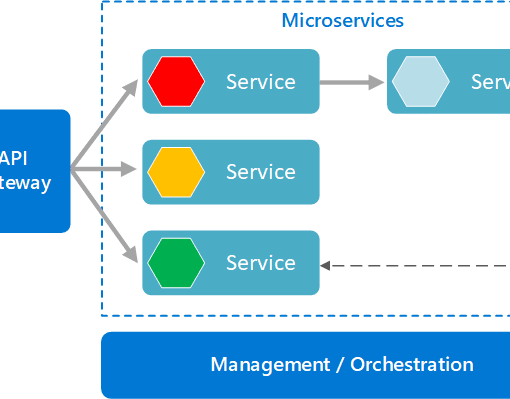

Horizontal Scaling (Scale Out)

Horizontal scaling increases capacity by adding more instances or nodes and distributing the workload among them. Because capacity is not bounded by the size of a single machine, this approach can scale much further, and it enables elastic systems that add or remove instances automatically as demand changes, for example with Amazon EC2 Auto Scaling.
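To make "scale dynamically based on demand" concrete, the sketch below computes how many instances a group would need so that a per-instance metric (for example, average CPU utilization) returns to a target value. It is a simplified assumption modeled on the proportional idea behind target tracking scaling policies, not the exact algorithm AWS uses; real policies also apply cooldowns, instance warm-up, and more cautious scale-in. The function name and parameters are illustrative.

```python
import math


def desired_capacity(current_capacity: int, metric_value: float, target_value: float,
                     min_size: int, max_size: int) -> int:
    """Resize so the per-instance metric lands near the target (simplified sketch)."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    # Assume load is spread evenly, so total load ~ capacity * per-instance metric.
    raw = math.ceil(current_capacity * metric_value / target_value)
    # Stay within the group's configured minimum and maximum size.
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, 90.0, 60.0, min_size=2, max_size=10))  # 6: scale out
print(desired_capacity(4, 30.0, 60.0, min_size=2, max_size=10))  # 2: scale in
print(desired_capacity(8, 95.0, 50.0, min_size=2, max_size=10))  # 10: capped at max_size
```

The minimum and maximum bounds matter in practice: the minimum keeps enough instances for availability, and the maximum caps cost during unexpected spikes.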

However, not all applications naturally support horizontal scaling. Stateful applications, for instance, require managing session or state information across nodes, which can complicate scaling efforts.

Stateless vs. Stateful Applications

Stateless applications do not rely on session-specific data stored on individual nodes. Because any node can handle any request, nodes can be added or removed without affecting the user experience. Examples include static websites and applications that keep session data in a managed database such as Amazon DynamoDB and store files in highly available storage such as Amazon S3 or Amazon EFS.
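A minimal sketch of the stateless pattern: two nodes share one external session store, so a user's requests can land on either node. The `InMemorySessionStore` class is a hypothetical stand-in for a shared store such as a DynamoDB table, used here so the example runs locally; a real deployment would call the database through the AWS SDK instead.

```python
from typing import Dict, Protocol


class SessionStore(Protocol):
    def get(self, session_id: str) -> Dict[str, int]: ...
    def put(self, session_id: str, data: Dict[str, int]) -> None: ...


class InMemorySessionStore:
    """Hypothetical stand-in for a shared store such as a DynamoDB table."""

    def __init__(self) -> None:
        self._items: Dict[str, Dict[str, int]] = {}

    def get(self, session_id: str) -> Dict[str, int]:
        return dict(self._items.get(session_id, {}))  # return a copy, like a fresh read

    def put(self, session_id: str, data: Dict[str, int]) -> None:
        self._items[session_id] = dict(data)


class StatelessNode:
    """Holds no per-user data; every request reads and writes the shared store."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> Dict[str, int]:
        cart = self.store.get(session_id)
        cart[item] = cart.get(item, 0) + 1
        self.store.put(session_id, cart)
        return cart


store = InMemorySessionStore()
nodes = [StatelessNode("node-a", store), StatelessNode("node-b", store)]
# Two requests from the same user hit different nodes, yet the cart stays consistent.
nodes[0].add_to_cart("user-42", "book")
print(nodes[1].add_to_cart("user-42", "book"))  # {'book': 2}
```

Because neither `StatelessNode` instance holds per-user data, nodes can be removed or added without losing carts. A real shared store would also need to handle concurrent updates to the same session.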

For stateful applications that cannot be made stateless because of inherent requirements or legacy constraints, session affinity (sticky sessions) routes all of a user's requests to the same node, for example through sticky sessions on an Application Load Balancer target group. However, this can spread load unevenly across nodes, and the sessions held on a node are lost if that node fails.
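The sketch below models cookie-based session affinity in the spirit of load balancer stickiness: a new session is assigned to a node round-robin, and the client's cookie then pins later requests to that node. `StickyLoadBalancer` and its methods are hypothetical and greatly simplified; they are not how an Application Load Balancer is implemented.

```python
from typing import List, Optional


class StickyLoadBalancer:
    """Hypothetical, simplified model of cookie-based session affinity."""

    def __init__(self, nodes: List[str]) -> None:
        self.nodes = list(nodes)
        self._counter = 0

    def _next_node(self) -> str:
        # Round-robin choice for sessions that are not pinned yet.
        node = self.nodes[self._counter % len(self.nodes)]
        self._counter += 1
        return node

    def route(self, cookie: Optional[str]) -> str:
        """Return the node for this request; the client keeps it as its affinity cookie."""
        if cookie is not None and cookie in self.nodes:
            return cookie  # sticky: same node as the previous request
        return self._next_node()  # new session, or the pinned node is gone

    def remove_node(self, node: str) -> None:
        """Simulate a node failing or being scaled in."""
        self.nodes.remove(node)


lb = StickyLoadBalancer(["node-a", "node-b", "node-c"])
alice = lb.route(None)  # new session -> node-a
bob = lb.route(None)    # new session -> node-b
print(alice, lb.route(alice), lb.route(alice))  # node-a node-a node-a
lb.remove_node(alice)
print(lb.route(alice))  # node-a is gone, so the session is reassigned (here to node-b)
```

The last call illustrates the trade-off described above: when a pinned node disappears, the session moves to another node, and any state that lived only on the failed node is lost.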

Load Distribution Strategies

Once a scalable system is in place, distributing load efficiently among its nodes becomes crucial. There are two primary strategies:

- Push Method: A central coordinator, such as Elastic Load Balancing, sends tasks or data directly to the nodes; this suits real-time processing scenarios.

- Pull Method: Nodes independently fetch tasks or data from a shared queue or stream, such as Amazon SQS or Amazon Kinesis, whenever they have capacity; this is ideal for batch jobs and distributed processing of large datasets.
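The two strategies can be contrasted in a small, local sketch. `push_dispatch` plays the role of a coordinator that assigns work up front, while `pull_process` lets worker threads fetch from a shared `queue.Queue`, which stands in (as an assumption) for a service such as Amazon SQS. Both function names are illustrative.

```python
import queue
import threading
from collections import Counter
from typing import Dict, List


def push_dispatch(tasks: List[int], workers: List[str]) -> Dict[str, List[int]]:
    """Push: a central coordinator assigns every task to a worker (round-robin here)."""
    assignments: Dict[str, List[int]] = {w: [] for w in workers}
    for i, task in enumerate(tasks):
        assignments[workers[i % len(workers)]].append(task)
    return assignments


def pull_process(tasks: List[int], num_workers: int) -> Counter:
    """Pull: workers fetch tasks from a shared queue until it is empty."""
    work: "queue.Queue[int]" = queue.Queue()
    for task in tasks:
        work.put(task)
    processed_by: Counter = Counter()
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            try:
                work.get_nowait()  # take the next task only when this worker is free
            except queue.Empty:
                return  # queue drained, so this worker stops
            with lock:
                processed_by[name] += 1  # record which worker handled the task

    threads = [threading.Thread(target=worker, args=(f"worker-{n}",)) for n in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed_by


print(push_dispatch(list(range(6)), ["a", "b"]))  # {'a': [0, 2, 4], 'b': [1, 3, 5]}
counts = pull_process(list(range(100)), num_workers=4)
print(sum(counts.values()))  # 100, though the per-worker split varies between runs
```

With push, the coordinator decides the split, so a slow worker can fall behind while others sit idle; with pull, faster workers simply take more tasks, which is why the per-worker counts from `pull_process` vary between runs.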

Distributed Processing

When a workload involves processing large volumes of data, distributed processing breaks large tasks into smaller, manageable jobs that run in parallel across multiple servers:

- Distributed Data Processing: Frameworks such as Apache Hadoop, available as a managed service through Amazon EMR, split large datasets across a cluster and process them in parallel.

- Real-Time Data Streams: Amazon Kinesis Data Streams divides a continuous data stream into shards, so that multiple consumers can process the records in parallel.
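The following sketch combines both ideas: records are routed to shards by hashing a partition key, and each shard is then processed in parallel before the partial results are merged, in the map-and-reduce style used by Hadoop. Kinesis Data Streams maps partition keys to 128-bit integers with MD5; the `shard_for` function assumes the shards divide that hash range into equal, contiguous slices, and all function names here are hypothetical. Threads keep the example simple; CPU-bound work would typically use processes or a cluster instead.

```python
import hashlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

HASH_SPACE = 2 ** 128  # MD5 digests are 128-bit values


def shard_for(partition_key: str, num_shards: int) -> int:
    """Map a partition key to a shard index (assumes equal, contiguous hash ranges)."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_key = int.from_bytes(digest, "big")
    return hash_key * num_shards // HASH_SPACE


def partition(records: List[Tuple[str, str]], num_shards: int) -> Dict[int, List[str]]:
    """Group record payloads by the shard their partition key hashes to."""
    shards: Dict[int, List[str]] = {i: [] for i in range(num_shards)}
    for key, payload in records:
        shards[shard_for(key, num_shards)].append(payload)
    return shards


def count_words(lines: List[str]) -> Counter:
    """Map step: count words within a single shard."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())
    return counts


def process_in_parallel(records: List[Tuple[str, str]], num_shards: int) -> Counter:
    """Process every shard concurrently, then merge (reduce) the partial counts."""
    shards = partition(records, num_shards)
    with ThreadPoolExecutor(max_workers=num_shards) as pool:
        partials = list(pool.map(count_words, shards.values()))
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


records = [("sensor-1", "error ok"), ("sensor-2", "ok ok"), ("sensor-3", "error")]
result = process_in_parallel(records, num_shards=4)
print(result["ok"], result["error"])  # 3 2
```

Because the key-to-shard mapping is deterministic, all records with the same partition key go to the same shard, while the final counts do not depend on how keys happen to spread across shards.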

Designing scalable applications on AWS generally means favoring horizontal scaling and keeping application nodes stateless, so that applications can handle increasing demand while maintaining performance and reliability. By using AWS services such as DynamoDB, S3, and EFS for state management and Kinesis and Amazon EMR for distributed processing, organizations can build robust, scalable systems that keep pace with evolving business needs.